Enabling reproducible and agile full-system simulation

Paper on IEEE Xplore | Paper Download (pre-print) | Paper experiment data artifact | Presentation Video | gem5art Source Code | gem5-resources

Zen and the art of gem5 experiments

We are excited to announce the gem5art project, a framework aiming to tackle the issue of reproducibility of gem5 experiments and improve the overall testing and experimental infrastructure of gem5.

The framework is written in Python 3 and uses MongoDB to store a database of experiments. gem5art is available from the Python Package Index (PyPI) and can be installed with pip.

To get started, install the gem5art packages via pip with the following command:

pip install gem5art-artifact gem5art-run gem5art-tasks

The Need for a Framework for gem5 Experiments

The gem5 simulator provides rich support for a wide variety of complex hardware models and configurations. However, this flexibility comes at a cost. Because gem5 can be used in so many different ways, there is no standard approach for running gem5 experiments. This makes the learning curve steep and makes reproducing a gem5 experiment a non-trivial process.

Setting up a gem5 full-system simulation is one example of a highly non-trivial process. A typical full-system experiment requires the following inputs: a compiled Linux kernel, a disk image containing a Linux distribution and the compiled benchmark suite, a compiled gem5 executable, and a gem5 system configuration. Each of these components is sensitive to changes over time and in the environment, and some of them take a significant amount of time and attention to produce correctly.

For example, gem5 requires a very specific Linux kernel configuration. Another example is creating a working disk image with an installed benchmark. A frequent approach is to use QEMU and manually type commands to create such a disk image; this approach often takes several hours, demands a lot of human attention, and is error-prone. Another factor affecting the reproducibility of an experiment is the compiler, which is frequently updated over time. Therefore, it is desirable to keep the artifacts that we used for experiments.

Unlike many other areas of science, we have the luxury of keeping carbon copies of the inputs to our experiments, along with a certain degree of determinism in the experiment process. Thus, it is desirable to have a systematic approach for producing and documenting a gem5 experiment; more importantly, it is essential to have a standard approach for running gem5 experiments that allows thorough testing and reproducibility, both for ourselves and for other researchers.

gem5art: Artifact, Reproducibility and Testing Framework for gem5

The gem5art framework contains features that simplify the process of conducting structured gem5 experiments: it allows users to add important documentation (i.e., meta-data) to each component of a gem5 experiment, creates a database that backs up the inputs and outputs of experiments, provides a way to detect duplicated experiments, provides sample Linux configurations, and provides a template for automating disk image creation.

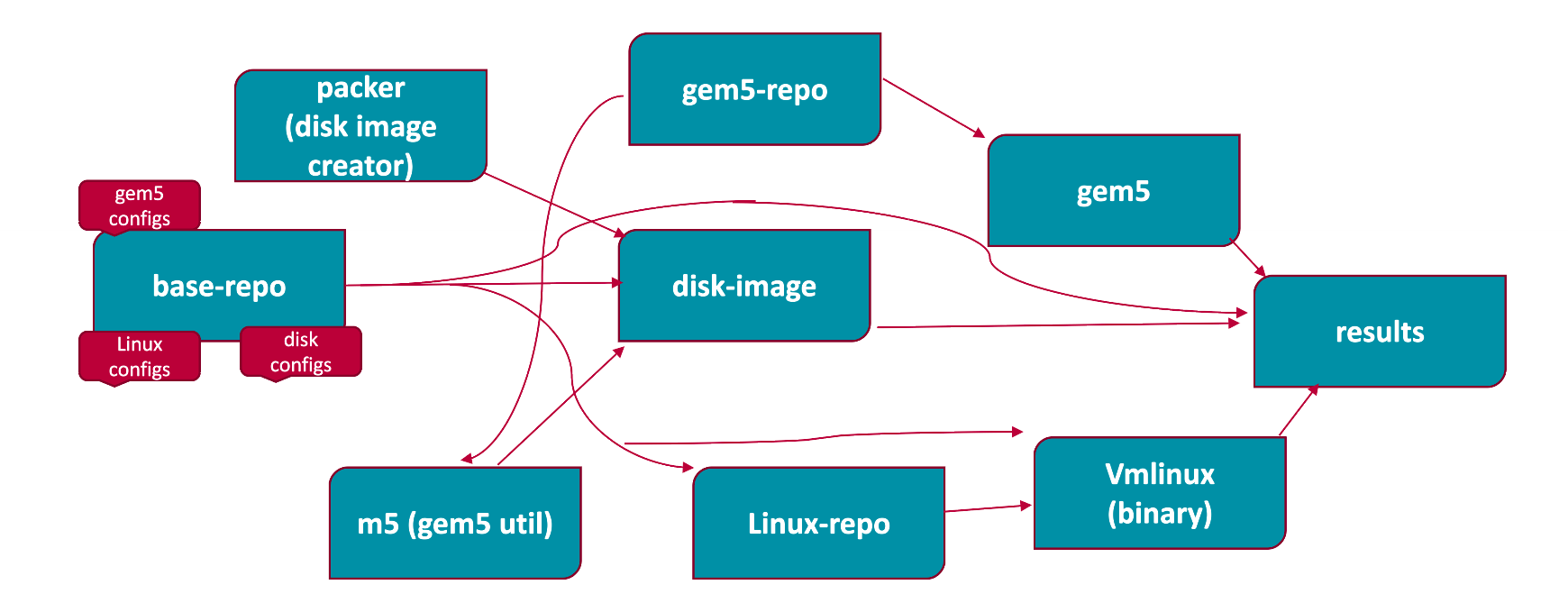

Each tile indicates a component of the experiment, where the components are represented as Artifact objects in a gem5art launch script. The launch script corresponding to the setup can be found here. The setup consists of the base repository, the gem5 repository and the Linux repository as the inputs, and the results as the output. The base repository contains the scripts (e.g. the launch script and the scripts for installing benchmarks while building disk images) and the configs (e.g. gem5 configs, Linux kernel configs, and Packer configs for building disk images). The gem5 repository and the Linux repository are the git repositories containing the source code of the corresponding projects. The results component is the zipped file containing all outputs of the gem5 run. Other than the inputs and outputs, the intermediate artifacts are the gem5 binary, the m5 binary, the vmlinux binary and the disk images. The steps to create the intermediate artifacts are expected to be documented in the gem5art launch script. Each artifact is backed up to the database when it is registered: git hashes are stored for git repositories, and the files themselves are stored for all other artifacts.
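The registration behavior described above can be illustrated with a minimal, self-contained sketch. It is not the gem5art API: `ArtifactRecord`, `InMemoryArtifactStore`, and their methods are hypothetical names, a pair of dictionaries stands in for the MongoDB database, and the commit hash and file contents are placeholders. The sketch only shows the idea that a git repository is recorded by its hash, while any other artifact is recorded together with its file contents.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class ArtifactRecord:
    """Hypothetical stand-in for one artifact entry (not the gem5art class)."""
    name: str
    typ: str            # e.g. "git repo", "gem5 binary", "disk image"
    command: str        # how the artifact was produced
    documentation: str  # free-form notes for future readers
    content_hash: str   # git commit hash, or hash of the file contents
    inputs: List[str] = field(default_factory=list)  # hashes of input artifacts


class InMemoryArtifactStore:
    """Toy replacement for the database: metadata records plus file blobs."""

    def __init__(self) -> None:
        self.records: Dict[str, ArtifactRecord] = {}
        self.blobs: Dict[str, bytes] = {}

    def register_git_repo(self, name: str, command: str,
                          documentation: str, commit_hash: str) -> ArtifactRecord:
        # For a git repository only the hash is stored, not the files.
        record = ArtifactRecord(name, "git repo", command, documentation, commit_hash)
        self.records[commit_hash] = record
        return record

    def register_file(self, name: str, typ: str, command: str, documentation: str,
                      data: bytes, inputs: Sequence[ArtifactRecord] = ()) -> ArtifactRecord:
        # For any other artifact the file contents are stored, keyed by their hash.
        digest = hashlib.sha256(data).hexdigest()
        record = ArtifactRecord(name, typ, command, documentation, digest,
                                [a.content_hash for a in inputs])
        self.records[digest] = record
        self.blobs[digest] = data
        return record


store = InMemoryArtifactStore()
gem5_repo = store.register_git_repo(
    name="gem5",
    command="git clone <gem5 repository URL>",  # placeholder command
    documentation="gem5 source used for this experiment",
    commit_hash="0123abc",                      # placeholder hash
)
gem5_binary = store.register_file(
    name="gem5.opt",
    typ="gem5 binary",
    command="scons build/X86/gem5.opt",
    documentation="gem5 binary built from the registered repository",
    data=b"placeholder binary contents",
    inputs=[gem5_repo],
)
```

Because the binary record lists the hash of the repository it was built from, the chain from source code to intermediate artifact stays traceable, which is the property the figure is meant to convey.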

It is essential to document and back up every component of an experiment, because this improves the reproducibility of the experiment. We define every input and output as a component (or an artifact, as we call it in gem5art) of an experiment. By adding meta-data (such as the created/modified date and time, the version of a binary or source code, and the commands or steps used to produce inputs and outputs) to every component, we obtain a system that automatically keeps track of the experiment instead of having to do it manually. As a result, we have well-defined inputs and thus a well-defined experiment. Adding meta-data also allows detecting duplicated experiments (those that share the same input components). We can therefore avoid re-running experiments systematically, instead of having to remember which experiments have been run, and avoid wasting time and hardware resources. gem5art also backs up the artifacts so that we can retrieve a binary, or the git hash of a git repository, used for an experiment.

gem5art also provides support for setting up gem5 full-system experiments. As mentioned, the default Linux configuration requires a significant amount of modification in order to produce a Linux kernel that works with gem5. Our development repository provides known working configurations for several versions of the Linux kernel. We also provide templates for creating disk images containing several commonly used benchmark suites, such as NAS Parallel Benchmarks, SPEC 2017, and PARSEC. Please follow the tutorials for more information.
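One way to picture duplicate detection is to derive a single key from the hashes of a run's input artifacts and to skip any run whose key has already been seen. The sketch below is an illustration under stated assumptions, not gem5art's implementation: `run_key` and `RunRegistry` are hypothetical names, the artifact hashes are placeholders, and including run parameters in the key is an assumption added for the example.

```python
import hashlib
from typing import Callable, Dict, Mapping, Sequence, Tuple


def run_key(inputs: Mapping[str, str], params: Sequence[str] = ()) -> str:
    """Derive one key from a run's input artifact hashes and parameters.

    `inputs` maps a role (e.g. "kernel") to that artifact's hash. Roles are
    sorted so the key does not depend on the order they were listed in.
    """
    digest = hashlib.sha256()
    for role in sorted(inputs):
        digest.update(f"{role}={inputs[role]}\n".encode())
    for param in params:  # parameter order is treated as significant
        digest.update(f"param={param}\n".encode())
    return digest.hexdigest()


class RunRegistry:
    """Remembers finished runs so identical ones are not executed twice."""

    def __init__(self) -> None:
        self._results: Dict[str, str] = {}

    def submit(self, inputs: Mapping[str, str], params: Sequence[str],
               run_fn: Callable[[], str]) -> Tuple[str, bool]:
        """Return (result, executed); `executed` is False for a duplicate."""
        key = run_key(inputs, params)
        if key in self._results:
            return self._results[key], False
        result = run_fn()
        self._results[key] = result
        return result, True


executions = []


def fake_gem5_run() -> str:
    # Stand-in for launching gem5; records that it was actually called.
    executions.append("ran")
    return "results.zip"


registry = RunRegistry()
inputs = {"gem5": "aaa111", "kernel": "bbb222", "disk": "ccc333"}  # placeholder hashes

first, first_executed = registry.submit(inputs, ["boot-exit"], fake_gem5_run)
# Same artifacts listed in a different order: treated as a duplicate.
reordered = {"disk": "ccc333", "gem5": "aaa111", "kernel": "bbb222"}
second, second_executed = registry.submit(reordered, ["boot-exit"], fake_gem5_run)
# A different kernel hash makes this a new experiment.
changed = {**inputs, "kernel": "ddd444"}
third, third_executed = registry.submit(changed, ["boot-exit"], fake_gem5_run)
```

Keying on hashes rather than file names means a rebuilt kernel or a modified disk image automatically counts as a new experiment, while an unchanged setup is recognized regardless of who launches it.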

Citation

Bobby R. Bruce, Ayaz Akram, Hoa Nguyen, Kyle Roarty, Mahyar Samani, Marjan Fariborz, Trivikram Reddy, Matthew D. Sinclair, and Jason Lowe-Power, “Enabling Reproducible and Agile Full-System Simulation,” 2021 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), 2021, pp. 183-193, doi: 10.1109/ISPASS51385.2021.00035.

@inproceedings{bruce2021gem5art,
  title={Enabling Reproducible and Agile Full-System Simulation},
  author={Bruce, Bobby R. and Akram, Ayaz and Nguyen, Hoa and Roarty, Kyle and Samani, Mahyar and Fariborz, Marjan and Reddy, Trivikram and Sinclair, Matthew D. and Lowe-Power, Jason},
  booktitle={In Proceedings of the 2021 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS '21)},
  year={2021},
  organization={IEEE},
  pages={183-193},
  doi={10.1109/ISPASS51385.2021.00035}
}
