Welcome to the Davis Computer Architecture Research Group (DArchR) website

It’s an exciting time to be a computer architect! For the past 40 years, we’ve relied on Moore’s Law and related manufacturing advances for the meteoric increase in computer performance. However, these advances are approaching their physical limits. To increase the efficiency of our devices and enable novel applications, we must architect new hardware and computing systems. Our research targets important end-to-end applications (e.g., big-data analytics, machine learning, and high-performance computing) and develops new hardware, software, and systems to improve their performance and increase their scalability. Improvements in computing systems’ performance have brought the world amazing things: smartphones, Google search, and machine learning. Now it is up to computer architects to enable the next wave of revolutionary applications.

Recently published papers

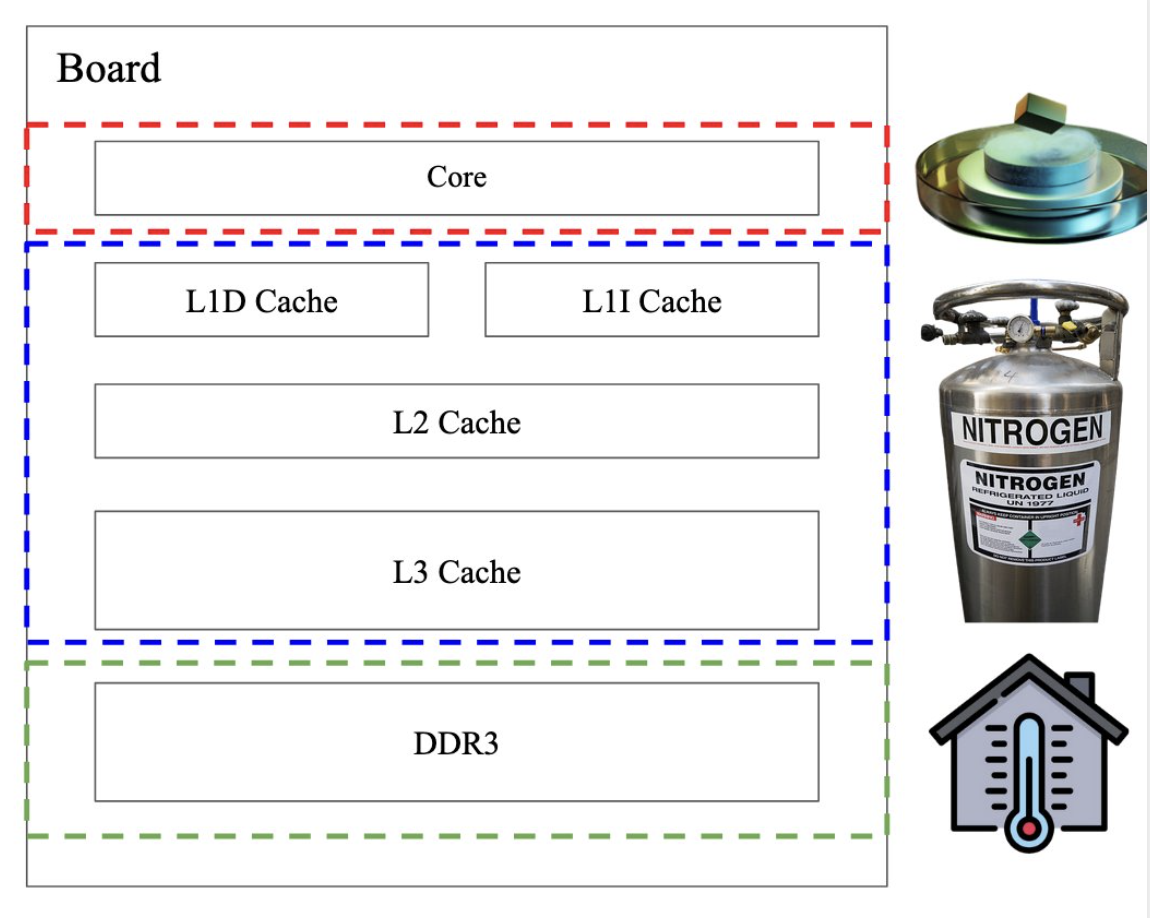

Potential and Limitation of High-Frequency Cores and Caches

A ModSim 2024 poster presentation on the potential of cryogenic semiconductor computing and superconductor electronics as alternatives to traditional semiconductor devices.

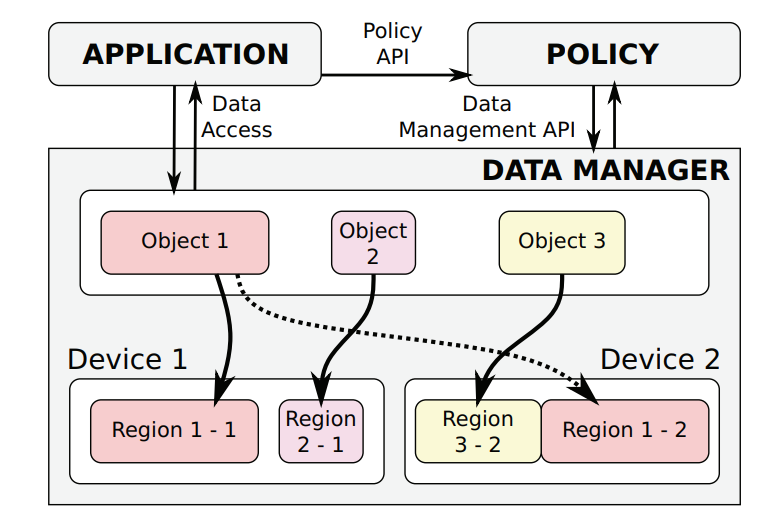

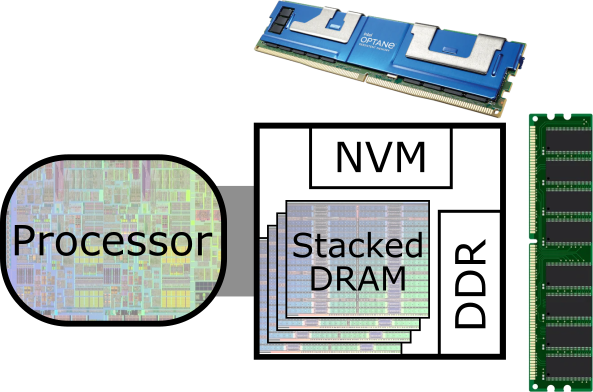

CachedArrays: Optimizing Data Movement for Heterogeneous Memory Systems

CachedArrays is a framework and API set for optimizing data tiering in heterogeneous memory systems by using programmer-provided semantic hints to enhance data placement, outperforming hardware caches in ML workloads.

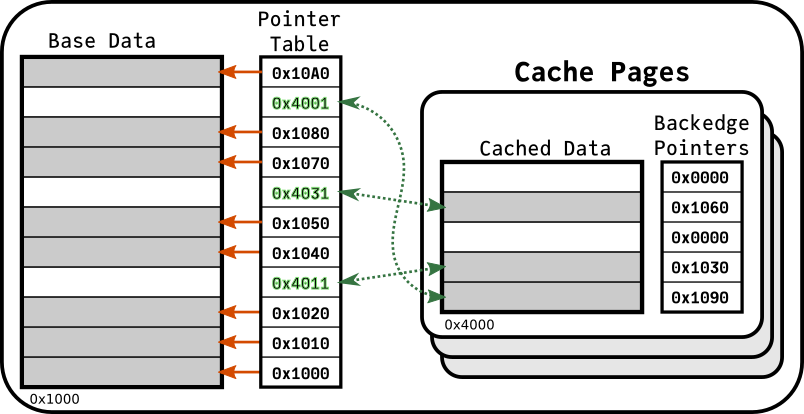

Efficient Large Scale DLRM Implementation on Heterogeneous Memory Systems

CachedEmbeddings is a data structure for efficient DLRM training on heterogeneous memory platforms. It offers a 1.4x to 2x speedup over top Intel CPU implementations by using an implicit software-managed cache, integrated with Julia, for optimized sparse table operations.

Featured projects

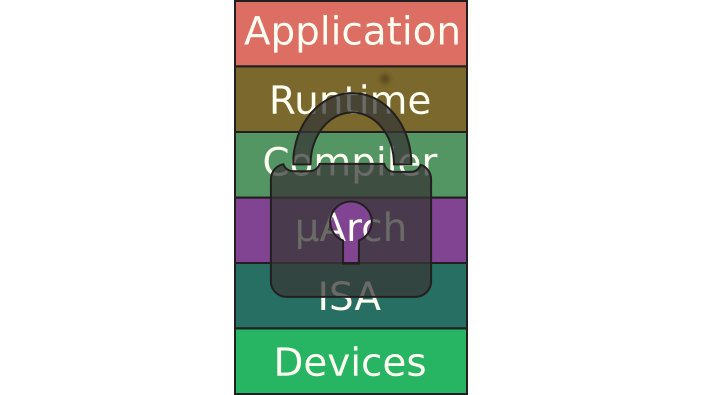

Hardware-software security interfaces

How can we design new hardware-software interfaces to allow for more secure devices?

Heterogeneous Memory

Cross-layer rethinking of the memory hierarchy for heterogeneous systems